Generative AI Energy Consumption: Challenges and Sustainable Solutions

The Energy Challenge of Generative AI

Imagine a world increasingly reliant on artificial intelligence – from self-driving cars to personalized medicine. This future hinges on powerful AI, but at what cost? The energy consumption of generative AI, particularly large language models (LLMs) and diffusion models used for image generation, is a growing concern. This article dives deep into this issue, exploring the factors driving AI’s power demands, its environmental impact, and the crucial strategies being developed for a more sustainable AI future.

What is the primary driver of energy consumption in generative AI?

The training phase, where AI models learn from massive datasets, is the most energy-intensive part of the AI lifecycle.

What are some practical steps to reduce AI’s environmental impact?

Practical steps include using energy-efficient hardware (like TPUs), optimizing training processes (e.g., transfer learning), implementing advanced cooling in data centers, and transitioning to renewable energy sources.

How can smaller organizations implement sustainable AI practices?

Smaller organizations can leverage cloud computing services that prioritize renewable energy, utilize pre-trained models to reduce training costs, and focus on model optimization techniques like quantization and pruning.

Understanding the Energy Footprint of AI: Training vs. Inference

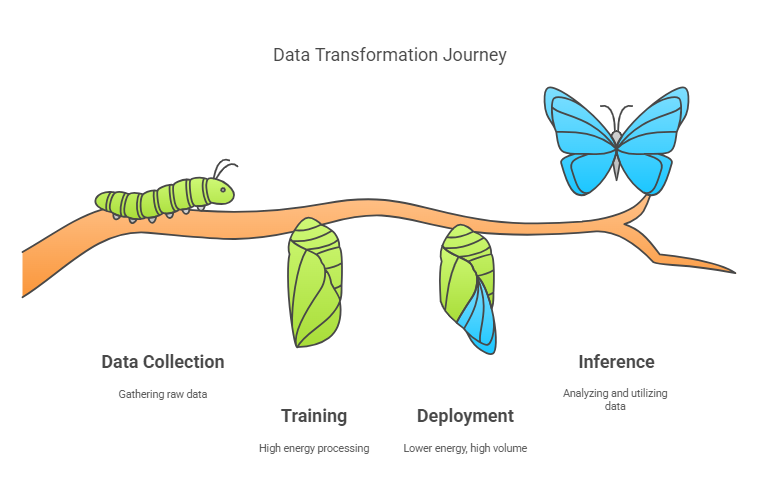

The energy footprint of AI encompasses the total energy consumed throughout its lifecycle:

- Training: The computationally intensive process of teaching an AI model using massive datasets. For a single model, this is typically the most energy-intensive stage. Think of it as the intensive learning phase of a student, requiring immense focus and energy.

- Inference: The process of using a trained model to make predictions or generate content. While less energy-intensive per interaction, the sheer volume of daily AI interactions contributes significantly to the overall energy footprint. This is like the student applying their learned knowledge in everyday tasks.

Several key factors drive this consumption:

- AI Hardware Energy Use: Specialized processors like GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units) are the workhorses of AI. These chips, especially during training, consume substantial electricity.

- AI Training Energy Consumption Statistics: One widely cited estimate puts the energy needed to train a single large language model at roughly what 120 US homes consume in a year, and Strubell et al. (2019) documented the substantial energy and carbon cost of training large NLP models. This highlights the urgent need for more energy-efficient AI practices.

- AI Inference Energy: While individual inferences are less demanding, the cumulative effect of billions of daily AI interactions contributes significantly to the overall energy footprint.
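To make the training-versus-inference comparison concrete, here is a minimal back-of-the-envelope sketch. Every number in it (device count, power draw, request volume, energy per request) is a hypothetical assumption chosen for illustration, not a measurement of any real model, and the function names are invented for this example.

```python
def training_energy_kwh(avg_power_watts: float, hours: float,
                        num_devices: int = 1, pue: float = 1.0) -> float:
    """Energy (kWh) drawn by `num_devices` accelerators, scaled by data center PUE."""
    return avg_power_watts * num_devices * hours * pue / 1000.0


def inference_energy_kwh(wh_per_request: float, requests_per_day: float,
                         days: int) -> float:
    """Cumulative inference energy (kWh) over `days` of serving."""
    return wh_per_request * requests_per_day * days / 1000.0


# Hypothetical workload: all values below are assumptions for illustration only.
training = training_energy_kwh(avg_power_watts=300, hours=24 * 30,
                               num_devices=512, pue=1.2)
serving = inference_energy_kwh(wh_per_request=0.3,
                               requests_per_day=10_000_000, days=365)

print(f"one-off training run:  {training:,.0f} kWh")
print(f"one year of inference: {serving:,.0f} kWh")
```

With these particular assumptions, a year of serving outweighs the single training run, which is why per-request efficiency matters even when each request is cheap. Different assumptions can easily flip the balance.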

AI Data Center Efficiency: The Foundation of Green AI

AI computations primarily occur in massive data centers, making their efficiency paramount. Several factors influence data center efficiency:

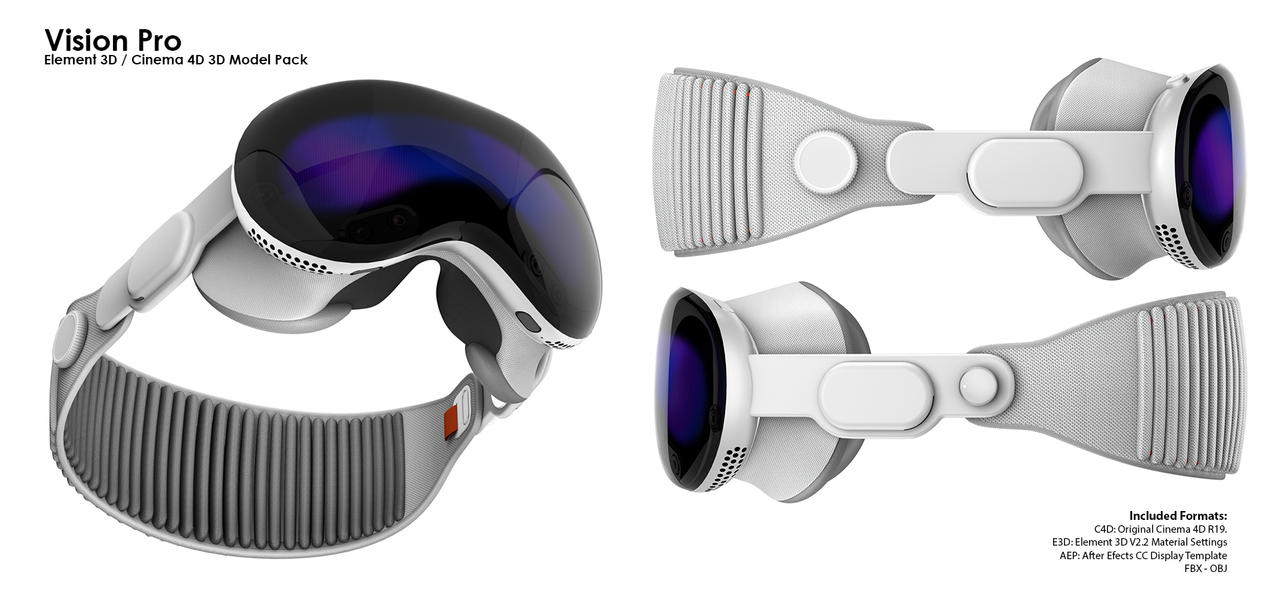

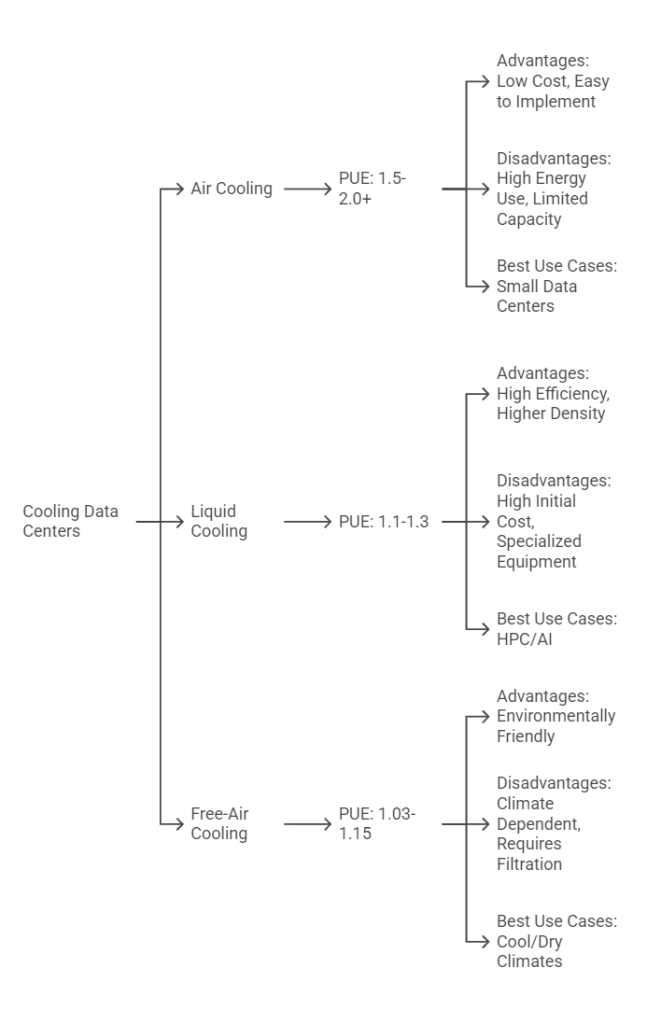

- AI Cooling Systems: Keeping powerful processors from overheating requires energy-intensive cooling systems. Traditional air conditioning is a major energy drain. Innovative solutions like liquid cooling (using liquids to dissipate heat more effectively) and free-air cooling (using outside air) are being implemented to improve efficiency and reduce Power Usage Effectiveness (PUE), the ratio of a data center's total energy use to the energy delivered to its computing equipment (ideally close to 1.0).

- Power Management for AI: Effective power management optimizes energy use through dynamic frequency scaling (adjusting processor speed based on workload), workload optimization (distributing tasks efficiently), and optimized power distribution within the data center.

- Renewable Energy for AI: Powering data centers with renewable energy sources like wind and solar is crucial for reducing AI’s carbon footprint. Many tech giants are investing heavily in renewable energy to power their AI operations.
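PUE is defined as total facility energy divided by the energy delivered to IT equipment, so it can be computed directly from two meter readings. The sketch below uses hypothetical readings to show how a lower PUE reduces overhead for the same computing load; the function names and figures are assumptions for illustration.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Energy spent on cooling, power conversion, etc. for a given IT load."""
    return it_equipment_kwh * (pue_value - 1.0)


# Hypothetical meter readings for the same 1,000 kWh IT load.
legacy = pue(total_facility_kwh=1800, it_equipment_kwh=1000)
efficient = pue(total_facility_kwh=1100, it_equipment_kwh=1000)

saved = overhead_kwh(1000, legacy) - overhead_kwh(1000, efficient)
print(f"legacy PUE={legacy:.2f}, efficient PUE={efficient:.2f}, saved={saved:.0f} kWh")
```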

AI Computational Efficiency: Smarter Algorithms, Less Power

Improving the efficiency of AI algorithms is equally vital:

- AI Model Optimization: Several techniques reduce a model's computational cost:

  - Model Pruning: Removing less important connections in the neural network to reduce computational load. (Imagine trimming unnecessary branches from a tree to reduce its size and resource needs.)

  - Quantization: Reducing the numerical precision of the values used in calculations (for example, from 32-bit floating point to 8-bit integers), which simplifies computation. (Like rounding 3.14159 to 3.14.)

  - Knowledge Distillation: Training smaller, more efficient models to mimic the performance of larger, more complex ones. (Like a student learning from a teacher’s notes rather than the entire textbook.)

Visual representation of model pruning and quantization:

    +----------------------------------+  +----------------------------------+
    |          Model Pruning           |  |           Quantization           |
    +----------------------------------+  +----------------------------------+
    |                                  |  |                                  |
    |            O---X---O             |  |              3.14159             |
    |           / \ / \ / \            |  |                 |                |
    |          O---O---O---O           |  |               3.14               |
    |         / \ / \ / \ / \          |  |                 |                |
    |        O---O---O---O---O         |  |                3.1               |
    |         \ / \ / \ / \ /          |  |                 |                |
    |          O---O---O---O           |  |                 3                |
    |           \ / \ / \ /            |  |                                  |
    |            O---X---O             |  |                                  |
    |                                  |  |                                  |
    |      X = Pruned Connection       |  |                                  |
    |                                  |  |                                  |
    | Fewer Connections = Less Compute |  | Less Precision = Less Storage &  |
    |                                  |  | Faster Calculations              |
    +----------------------------------+  +----------------------------------+

- Eco-friendly AI Algorithms: Researchers are developing algorithms specifically designed for energy efficiency, focusing on minimizing computations and memory access.

- AI Inference Energy Optimization: Techniques like model compression and efficient hardware usage during inference are being actively researched to minimize energy use during prediction and generation.
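The pruning and quantization ideas described above can be sketched in a few lines of plain Python. This is a toy illustration on a flat list of weights, assuming magnitude-based pruning and symmetric 8-bit quantization (two common variants; the article does not specify which ones it has in mind). Production frameworks provide their own, more sophisticated implementations.

```python
from __future__ import annotations


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)  # number of weights to remove
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric linear quantization to the range [-127, 127]; returns (codes, scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


# Hypothetical weights for illustration.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
codes, scale = quantize_int8(pruned)
restored = dequantize(codes, scale)

print("pruned:   ", pruned)
print("int8 codes:", codes)
print("restored: ", [round(r, 3) for r in restored])
```

The restored weights differ from the pruned ones by at most half a quantization step (`scale / 2`), which is the accuracy traded for smaller storage and cheaper arithmetic.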

Sustainable AI Practices: A Holistic Approach

Creating truly sustainable AI requires a multi-pronged approach:

- Green AI Initiatives: Numerous research initiatives and collaborations promote sustainable AI practices, including sharing best practices, developing AI energy efficiency benchmarks, and advocating for policies supporting green AI.

- AI Energy Monitoring: Accurate monitoring of AI energy usage is crucial. Standardized AI Energy Usage Metrics are being developed to facilitate comparison and improvement.

- AI and Energy Policy: Policy and regulation can incentivize renewable energy use, set data center efficiency standards, and fund green AI research. The EU’s focus on green technology in data centers is a prime example of regional efforts.

- AI Carbon Emissions and AI Energy Reduction Techniques: Reducing AI’s carbon footprint requires a combination of renewable energy, improved energy efficiency, and energy reduction techniques applied throughout the AI lifecycle.
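Energy monitoring and carbon accounting connect through a simple relationship: emissions are roughly the energy drawn by the facility multiplied by the carbon intensity of the grid supplying it. The sketch below illustrates this under stated assumptions; the `EnergyReport` class and all figures are hypothetical, and real carbon intensities vary by region and hour.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnergyReport:
    """Hypothetical summary of one AI workload's energy use."""
    it_energy_kwh: float         # energy measured at the servers
    pue: float                   # data center overhead factor
    grid_kg_co2_per_kwh: float   # carbon intensity of the electricity supply

    @property
    def facility_energy_kwh(self) -> float:
        """Server energy plus cooling and power-delivery overhead."""
        return self.it_energy_kwh * self.pue

    @property
    def emissions_kg_co2(self) -> float:
        """Estimated emissions for the workload."""
        return self.facility_energy_kwh * self.grid_kg_co2_per_kwh


# Same workload, two hypothetical hosting options.
fossil_heavy = EnergyReport(it_energy_kwh=10_000, pue=1.5, grid_kg_co2_per_kwh=0.5)
low_carbon = EnergyReport(it_energy_kwh=10_000, pue=1.1, grid_kg_co2_per_kwh=0.05)

for name, report in [("fossil-heavy", fossil_heavy), ("low-carbon", low_carbon)]:
    print(f"{name}: {report.facility_energy_kwh:,.0f} kWh, "
          f"{report.emissions_kg_co2:,.0f} kg CO2")
```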

AI Energy Costs: A Cost Analysis

The high energy consumption of AI translates into significant electricity costs for companies operating large-scale AI systems. This drives research and development towards more energy-efficient solutions.

Key Strategies for Reducing Generative AI Energy Consumption:

- Generative AI Energy-Efficient Hardware: Using the latest generation of GPUs and TPUs, designed for AI workloads and energy efficiency. Google’s TPUs, for example, are designed with power efficiency in mind, offering improved performance per watt compared to general-purpose processors.

- AI Training Energy Consumption Reduction: Techniques like transfer learning (reusing pre-trained models) and efficient data management reduce training time and energy consumption.

- AI Data Center Efficiency Techniques: Investing in innovative cooling technologies like liquid cooling and free-air cooling minimizes energy used for data center cooling.

- Renewable Energy for Sustainable AI Development: Transitioning to renewable energy sources to power AI operations is crucial for reducing the carbon footprint.

- AI Model Energy Consumption Reduction: Prioritizing research and development of AI Model Optimization techniques and Eco-friendly AI Algorithms.

Case Studies: Leading the Way in Sustainable AI

- DeepMind: DeepMind has reported substantial reductions in the energy used for AI training, achieved by optimizing its infrastructure and algorithms. This demonstrates the potential for significant savings through focused optimization.

- Google: Google’s investment in TPUs and focus on data center efficiency, combined with their commitment to carbon-free energy, showcases a comprehensive approach to sustainable AI. They have published research on the energy efficiency of their AI hardware and data centers, providing valuable insights for the industry.

- Microsoft: Microsoft has committed to becoming carbon negative by 2030 and is actively working on energy-efficient AI hardware and software.

Data and Statistics:

- Training a single large language model has been estimated to consume roughly as much energy as 120 US homes use in a year; Strubell et al. (2019) documented the energy and carbon cost of training large NLP models.

- Data centers globally account for approximately 1-2% of global electricity consumption, with AI workloads contributing a growing portion (Jones, 2018).

- Google reports that its first-generation TPU delivered roughly 30 to 80 times better performance per watt than contemporary CPUs and GPUs (Jouppi et al., 2017).

Glossary of Terms:

- PUE (Power Usage Effectiveness): The ratio of a data center's total energy use to the energy delivered to its computing equipment. A PUE of 1.0 would mean that all energy goes to computing, with none spent on cooling or other overhead.

- Model Pruning: A technique for reducing the size and complexity of a neural network by removing less important connections.

- Knowledge Distillation: A technique for training a smaller, more efficient model to mimic the behavior of a larger, more complex model.

- Transfer Learning: Reusing a pre-trained model for a new task, reducing the need for extensive training from scratch.

- Carbon Offsetting: Compensating for carbon emissions by investing in projects that reduce emissions elsewhere.

- Dynamic Frequency Scaling: Adjusting the operating frequency of a processor based on the workload, reducing power consumption during periods of low activity.

The “200 kWh Challenge” for AI (Metaphorical):

The “200 kWh” figure is a metaphor rather than a formal benchmark, but the idea of an explicit energy budget is relevant to AI: the community is working to drastically reduce the energy required for AI tasks.

How to Reduce GenAI Energy Costs:

Implementing the strategies outlined in this article, such as using energy-efficient hardware, optimizing training processes, and utilizing renewable energy, can significantly reduce AI energy costs for businesses.

How does AI affect the environment?

The increasing energy consumption of AI contributes to greenhouse gas emissions and exacerbates climate change. Transitioning to sustainable AI practices is essential to mitigate this impact.

Recent Developments in Low Power AI:

- Emerging Hardware: Companies are developing specialized AI chips with even greater energy efficiency, focusing on new architectures and manufacturing processes.

- Federated Learning: This approach trains AI models on decentralized data sources (like individual devices) without needing to centralize the data, potentially reducing energy consumption associated with data transfer.

- Neuromorphic Computing: This emerging field aims to create chips that mimic the human brain’s structure, which could offer significant energy efficiency advantages.
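To illustrate the federated learning idea above, here is a minimal sketch of one common aggregation step, federated averaging (FedAvg): each device trains locally and sends only its model weights, and the server combines them weighted by how much data each device saw. The article does not name a specific algorithm, so FedAvg is an assumption here, and the client data is hypothetical.

```python
from __future__ import annotations


def federated_average(client_weights: list[list[float]],
                      client_sizes: list[int]) -> list[float]:
    """Average client model weights, weighted by each client's sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must share the same model shape")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


# Two hypothetical devices: raw data stays local, only weights are shared.
global_model = federated_average(
    client_weights=[[1.0, 2.0], [3.0, 4.0]],
    client_sizes=[1, 3],
)
print(global_model)
```

Whether this saves energy overall depends on the workload: transferring weights can be cheaper than transferring raw data, but on-device training has its own cost.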

Regional Efforts in Green Tech and AI:

- European Union: The EU is actively promoting green technologies and setting strict energy efficiency standards for data centers, pushing for more sustainable AI practices within the region.

- India: India is making significant strides in renewable energy adoption, which can support the growth of a more sustainable AI sector within the country.

How to Implement Sustainable AI Practices in Your Organization

This step-by-step guide helps organizations of all sizes adopt environmentally friendly AI practices to reduce their energy consumption and carbon footprint.

Assess Your Current AI Energy Footprint

Begin by understanding your current energy consumption related to AI workloads. Utilize available monitoring tools and metrics to track the energy used in both training and inference phases of your AI models.
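One way to turn monitoring data into an energy figure is to integrate periodic power readings over time. The sketch below assumes you already have (timestamp, watts) samples from some source, such as a vendor monitoring tool or a metered power strip; the sample values are hypothetical, and the trapezoidal rule is just one reasonable way to integrate them.

```python
from __future__ import annotations

JOULES_PER_KWH = 3_600_000.0


def energy_kwh_from_samples(samples: list[tuple[float, float]]) -> float:
    """Integrate (timestamp_seconds, power_watts) samples with the trapezoidal rule."""
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        if t1 < t0:
            raise ValueError("samples must be sorted by timestamp")
        joules += 0.5 * (p0 + p1) * (t1 - t0)
    return joules / JOULES_PER_KWH


# Hypothetical readings taken over one hour of a training job.
readings = [(0.0, 200.0), (1800.0, 400.0), (3600.0, 400.0)]
used = energy_kwh_from_samples(readings)
print(f"energy used: {used:.3f} kWh")
```

Running the same calculation separately for training jobs and for inference services gives the per-phase breakdown this step calls for.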

Prioritize Energy-Efficient Hardware and Cloud Services

Choose GPUs, TPUs, or cloud computing instances that offer high performance per watt. Select providers committed to renewable energy sources, ensuring that your AI infrastructure is both powerful and environmentally sustainable.
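Performance per watt is simply throughput divided by power draw, so candidate hardware can be ranked once both have been measured on your own workload. The following sketch assumes hypothetical option names and numbers; it does not describe any real product.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Hypothetical hardware option with measurements from your own benchmark."""
    name: str
    throughput: float    # work per second, e.g. samples/s on your model
    power_watts: float   # average power draw during the benchmark

    @property
    def perf_per_watt(self) -> float:
        return self.throughput / self.power_watts


def rank_by_efficiency(options: list[Accelerator]) -> list[Accelerator]:
    """Sort options from most to least work done per watt."""
    return sorted(options, key=lambda a: a.perf_per_watt, reverse=True)


# Illustrative figures only.
candidates = [
    Accelerator("option-a", throughput=1000.0, power_watts=250.0),
    Accelerator("option-b", throughput=1500.0, power_watts=500.0),
    Accelerator("option-c", throughput=400.0, power_watts=80.0),
]

ranked = rank_by_efficiency(candidates)
for acc in ranked:
    print(f"{acc.name}: {acc.perf_per_watt:.1f} samples/s per watt")
```

Note that the fastest option is not necessarily the most efficient one; in this made-up example the option with the highest raw throughput ranks last per watt.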

Optimize AI Algorithms and Models

Implement techniques such as model pruning, quantization, and knowledge distillation to reduce the computational demands of your models. These strategies help streamline AI processes, lowering both energy usage and processing time.
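Of these techniques, knowledge distillation is the least obvious to picture in code. A common formulation trains the small "student" model to match the large "teacher" model's softened output probabilities, using a temperature-scaled KL divergence. The sketch below shows that loss for a single example in plain Python; the logits and the temperature are hypothetical, and in practice this term is usually combined with the ordinary training loss inside a deep learning framework.

```python
from __future__ import annotations

import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float],
                      teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened outputs, scaled by temperature squared."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(teacher, student) if p > 0)
    return temperature ** 2 * kl


# Hypothetical logits for one input with three classes.
teacher_out = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
poor_student = [0.5, 1.0, 4.0]

print("close student loss:", distillation_loss(close_student, teacher_out))
print("poor student loss: ", distillation_loss(poor_student, teacher_out))
```

A student whose outputs track the teacher's gets a loss near zero, while one that disagrees is penalized, which is what pushes the smaller model toward the larger model's behavior.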

Advocate for Green AI Policies

Support policies that incentivize the use of renewable energy in data centers and encourage research into sustainable AI technologies.

By following these steps, your organization can make significant strides in implementing more sustainable AI practices while maintaining performance and innovation.

The Role of Policy and Regulation in Green AI:

Government policies and regulations play a vital role in driving sustainable AI practices. This includes:

- Incentives for Renewable Energy: Tax breaks, subsidies, and other incentives can encourage data centers to transition to renewable energy sources.

- Energy Efficiency Standards: Setting mandatory energy efficiency standards for data centers can drive innovation in cooling technologies and power management.

- Funding for Green AI Research: Public funding for research into energy-efficient machine learning algorithms, hardware, and sustainable computing practices is essential.

- International Collaboration: Global cooperation on AI energy standards and best practices can ensure a consistent approach to sustainability.

Collaboration for a Sustainable AI Ecosystem:

Addressing AI’s energy challenge requires collaboration between various stakeholders:

- Tech Companies: Investing in research and development of energy-efficient AI technologies and committing to renewable energy.

- Researchers: Developing new algorithms, hardware, and optimization techniques.

- Policymakers: Creating policies and regulations that incentivize sustainable AI practices.

- Industry Organizations: Developing standards, best practices, and certifications for green AI.

Scaling Sustainable AI for All:

While large tech companies have the resources to invest heavily in sustainable AI, smaller organizations and startups can also take steps to reduce their energy footprint:

- Cloud Computing: Leveraging cloud providers that prioritize renewable energy can offer access to efficient infrastructure without the need for large capital investments.

- Pre-trained Models: Utilizing pre-trained models and fine-tuning them for specific tasks can significantly reduce training time and energy consumption.

- Open-Source Tools and Libraries: Utilizing open-source tools and libraries for model optimization can make these techniques accessible to a wider audience.

Conclusion: Building a Sustainable AI Future

The energy consumption of generative AI is a complex but crucial challenge. By focusing on AI Data Center Efficiency, AI Computational Efficiency, and Sustainable AI Practices, we can ensure AI’s benefits are realized without compromising the planet. Continuous research, innovation, and collaboration are essential. Can we unlock the full potential of AI while minimizing its environmental impact? The answer lies in our commitment to sustainable development.

Learn more about Green AI initiatives and support research into energy-efficient AI technologies. Consider supporting organizations like the Green Software Foundation (https://greensoftware.foundation/). Advocate for policies that promote sustainable AI development and encourage companies to prioritize green practices.

References:

Jones, N. (2018). How to stop data centres from gobbling up the world’s electricity. Nature, 561(7722), 163-166.